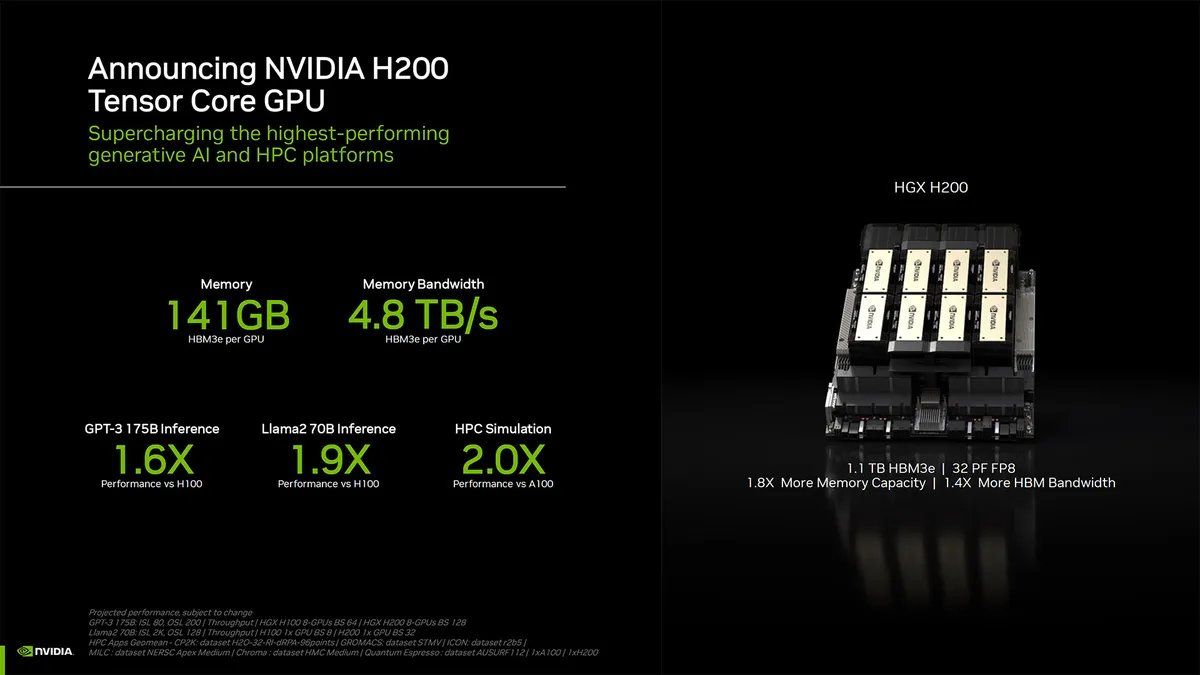

Nvidia Unveils H200 AI Chip Advancements

On Monday, Nvidia unveiled the H200, a new version of its top-of-the-line artificial intelligence chip. Set to roll out gradually next year, the chip is expected to outperform its predecessor, the H100. Amazon.com (AMZN.O), Google (GOOGL.O), and Oracle (ORCL.N) are slated as key partners.

The H200’s main improvement is its larger high-bandwidth memory, a key factor in how quickly the chip can process data. This matters for applications like OpenAI’s ChatGPT service, which relies heavily on Nvidia’s AI chips.

Substantial Upgrade: Accelerated Data Handling and Swifter Responses

With its high-bandwidth memory increased from 80 gigabytes to 141 gigabytes, roughly 76% more, the H200 promises faster data handling and quicker response times. Nvidia has not disclosed its memory suppliers for the new chip, though Micron Technology (MU.O) has previously said it aims to become an Nvidia supplier.

Nvidia buys memory from SK Hynix (000660.KS), a South Korean firm that reported a sales boost last month and credited the rebound to rising demand for AI chips.

In a subsequent announcement on Wednesday, Nvidia said that major cloud service providers, including Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, will be among the first to offer access to H200 chips. Specialized AI cloud providers such as CoreWeave, Lambda, and Vultr will offer the chips as well, widening the reach of Nvidia’s AI technology.
